1) Delete the default registry (if it exists)

[snow@openshift ~]$ oc logout

[snow@openshift ~]$ oc login -u system:admin

[snow@openshift ~]$ oc project default

[snow@openshift ~]$ oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-7fhl2 1/1 Running 0 18m

......

......

[snow@openshift ~]$ oc describe pod docker-registry-1-7fhl2 | grep -A3 'Volumes:'

Volumes:

registry-storage:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

Medium:

[snow@openshift ~]$ oc delete all -l docker-registry=default

pod "docker-registry-1-h2cdr" deleted

replicationcontroller "docker-registry-1" deleted

service "docker-registry" deleted

deploymentconfig.apps.openshift.io "docker-registry" deleted

[snow@openshift ~]$ oc delete all -l name=registry-console

pod "registry-console-1-2cg24" deleted

replicationcontroller "registry-console-1" deleted

service "registry-console" deleted

deploymentconfig.apps.openshift.io "registry-console" deleted

[snow@openshift ~]$ oc delete serviceaccount registry

serviceaccount "registry" deleted

[snow@openshift ~]$ oc delete oauthclients cockpit-oauth-client

oauthclient "cockpit-oauth-client" deleted

# If registry-registry-role exists, delete it as well

[snow@openshift ~]$ oc delete clusterrolebindings registry-registry-role

clusterrolebinding.authorization.openshift.io "registry-registry-role" deleted

[snow@openshift ~]$ oc get pods

NAME READY STATUS RESTARTS AGE

router-1-rq6k2 1/1 Running 0 20h
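The named deletions above (service account, OAuth client, cluster role binding) fail with a NotFound error for any object that is already gone. Below is a minimal idempotent sketch of the same cleanup. It assumes `oc` is logged in as `system:admin`. `--ignore-not-found` is a standard `oc delete` flag, but the function name `cleanup_default_registry` is hypothetical:

```shell
# Hypothetical helper: remove the default registry and registry console,
# skipping any object that does not exist. Assumes `oc` is logged in as
# a cluster admin (e.g. system:admin).
cleanup_default_registry() {
    local ns=default
    # Label selectors taken from the manual steps above.
    oc delete all -l docker-registry=default -n "$ns" --ignore-not-found || return 1
    oc delete all -l name=registry-console -n "$ns" --ignore-not-found || return 1
    oc delete serviceaccount registry -n "$ns" --ignore-not-found || return 1
    # Cluster-scoped objects: no namespace flag.
    oc delete oauthclients cockpit-oauth-client --ignore-not-found || return 1
    oc delete clusterrolebindings registry-registry-role --ignore-not-found || return 1
}
```

After sourcing the file and running `cleanup_default_registry`, `oc get pods -n default` should list only the router, as above.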

2) Configure the registry

(1) Verify node status

[snow@openshift ~]$ oc get nodes

NAME STATUS ROLES AGE VERSION

computenode1.1000cc.net Ready compute 20h v1.11.0+d4cacc0

computenode2.1000cc.net Ready compute 20h v1.11.0+d4cacc0

computenode3.1000cc.net Ready infra 11h v1.11.0+d4cacc0

openshift.1000cc.net Ready infra,master 20h v1.11.0+d4cacc0
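Reading the `STATUS` column by eye works for four nodes. Below is a small sketch that performs the same check from a script. It assumes the input is `oc get nodes --no-headers` output. The function name `all_nodes_ready` is hypothetical:

```shell
# Hypothetical helper: read `oc get nodes` output on stdin and succeed only
# if at least one node is listed and every node's STATUS is exactly Ready.
# A header line (NAME STATUS ...) is skipped if present; a cordoned node
# (Ready,SchedulingDisabled) is reported as not ready.
all_nodes_ready() {
    awk '
        $1 == "NAME" { next }    # skip header row
        NF == 0      { next }    # skip blank lines
        {
            n++
            if ($2 != "Ready") {
                bad = 1
                print "node not ready: " $1 " (" $2 ")" > "/dev/stderr"
            }
        }
        END { if (n == 0 || bad) exit 1; exit 0 }
    '
}
```

Usage: `oc get nodes --no-headers | all_nodes_ready && echo "all nodes Ready"`.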

(2) Create a storage directory for registry images on computenode1 (cn1)

[snow@openshift ~]$ ssh cn1 "sudo mkdir /var/lib/origin/registry"

[snow@openshift ~]$ ssh cn1 "sudo chown snow: /var/lib/origin/registry"

(3) Grant the registry service account the privileged SCC

[snow@openshift ~]$ oc adm policy add-scc-to-user privileged system:serviceaccount:default:registry

scc "privileged" added to: ["system:serviceaccount:default:registry"]
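`--mount-host` in the next step gives the registry pod a hostPath volume. The default `restricted` SCC does not allow hostPath volumes, so the `registry` service account needs the `privileged` grant. The hypothetical helper below confirms the grant took effect. It assumes the SCC's user list is read with `oc get scc privileged -o jsonpath='{.users[*]}'`:

```shell
# Hypothetical helper: read an SCC's space-separated user list on stdin
# and succeed if the given user name appears in it as an exact entry.
scc_has_user() {
    tr ' ' '\n' | grep -qxF -- "$1"
}
```

Usage: `oc get scc privileged -o jsonpath='{.users[*]}' | scc_has_user system:serviceaccount:default:registry && echo granted`.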

(4) Deploy the registry

[snow@openshift ~]$ sudo oc adm registry \
--config=/etc/origin/master/admin.kubeconfig \
--service-account=registry \
--mount-host=/var/lib/origin/registry \
--selector='kubernetes.io/hostname=computenode1.1000cc.net' \
--replicas=1

--> Creating registry registry ...

serviceaccount "registry" created

clusterrolebinding.authorization.openshift.io "registry-registry-role" created

deploymentconfig.apps.openshift.io "docker-registry" created

service "docker-registry" created

--> Success

[snow@openshift ~]$ oc project default

Now using project "default" on server "https://openshift.1000cc.net:8443".

[snow@openshift ~]$ oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-fp5kw 1/1 Running 0 26s

router-1-rq6k2 1/1 Running 0 20h

[snow@openshift ~]$ sudo oc describe pod docker-registry-1-fp5kw

Name: docker-registry-1-fp5kw

Namespace: default

Priority: 0

......

......

Normal Created 48s kubelet, computenode1.1000cc.net Created container

Normal Started 47s kubelet, computenode1.1000cc.net Started container

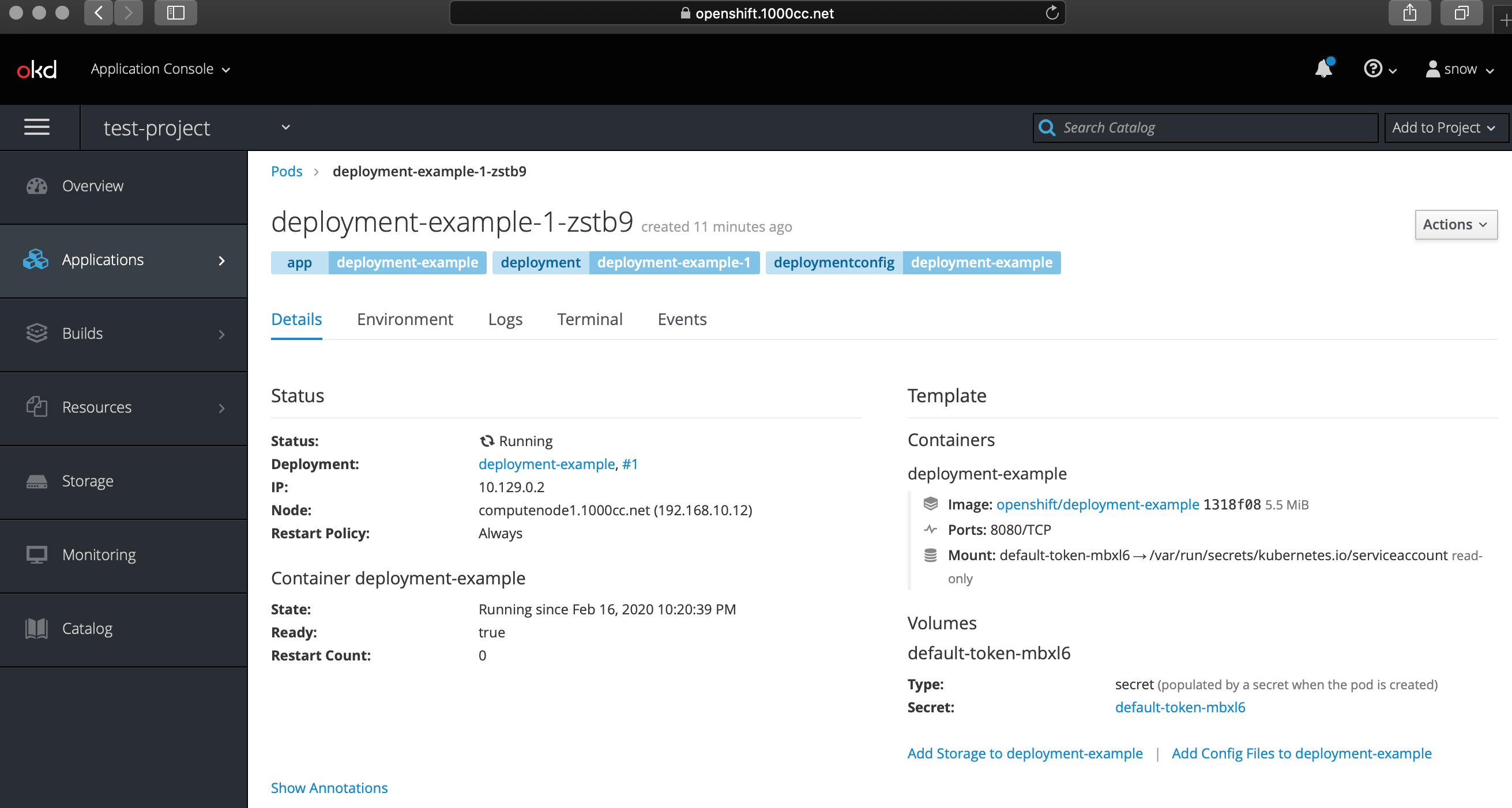

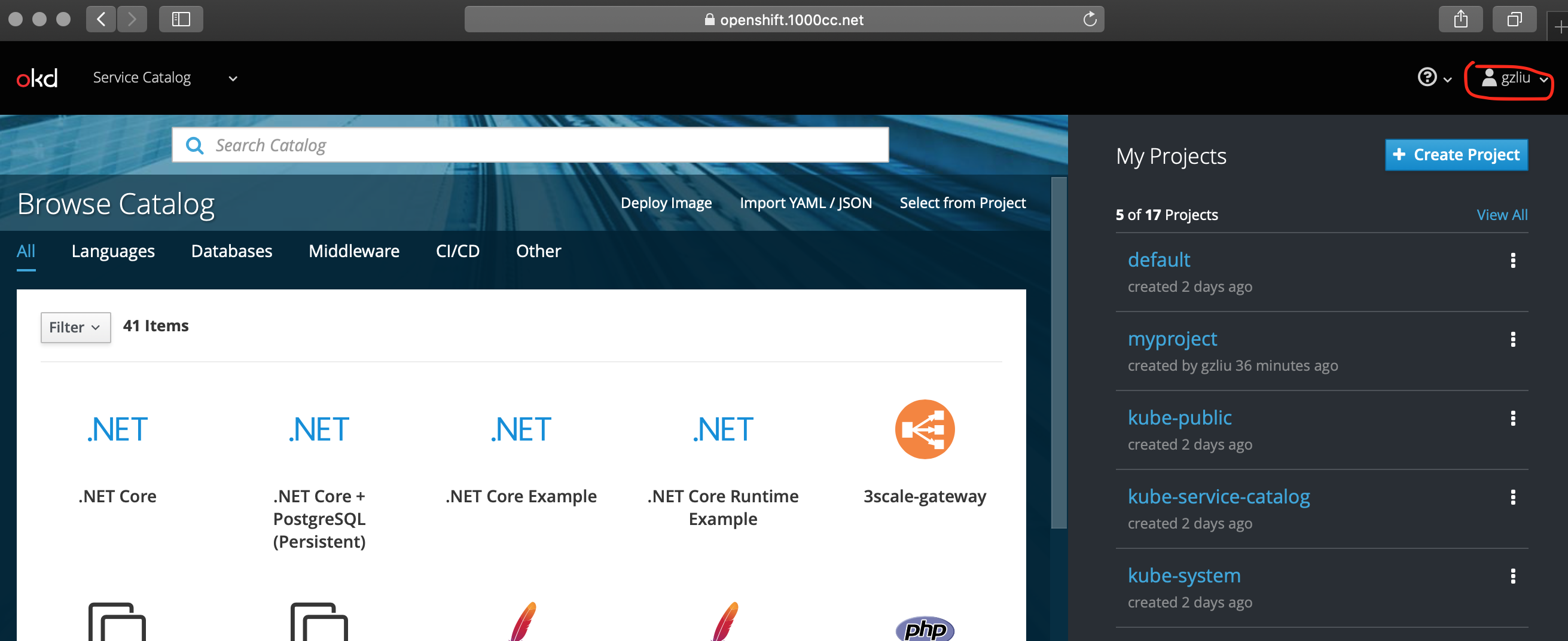
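In step 1, `oc describe` showed the old registry's `registry-storage` volume as an EmptyDir, which disappears together with the pod. The sketch below confirms that the new deployment mounts the host directory instead. It assumes access to the `default` project. `oc rollout status` and jsonpath output are standard `oc` features, but both function names are hypothetical:

```shell
# Hypothetical helpers for checking the registry deployment.
# Assumes `oc` is logged in with access to the "default" project.

# Block until the latest docker-registry deployment finishes (or fails).
wait_for_registry() {
    oc rollout status dc/docker-registry -n default
}

# Succeed if any hostPath volume in the registry's pod template uses the
# expected path (paths are split one per line and matched exactly).
registry_uses_host_path() {
    oc get dc docker-registry -n default \
        -o jsonpath='{.spec.template.spec.volumes[*].hostPath.path}' |
        tr ' ' '\n' | grep -qxF -- "$1"
}
```

Usage: `wait_for_registry && registry_uses_host_path /var/lib/origin/registry && echo "registry uses host storage"`.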

(5) Test

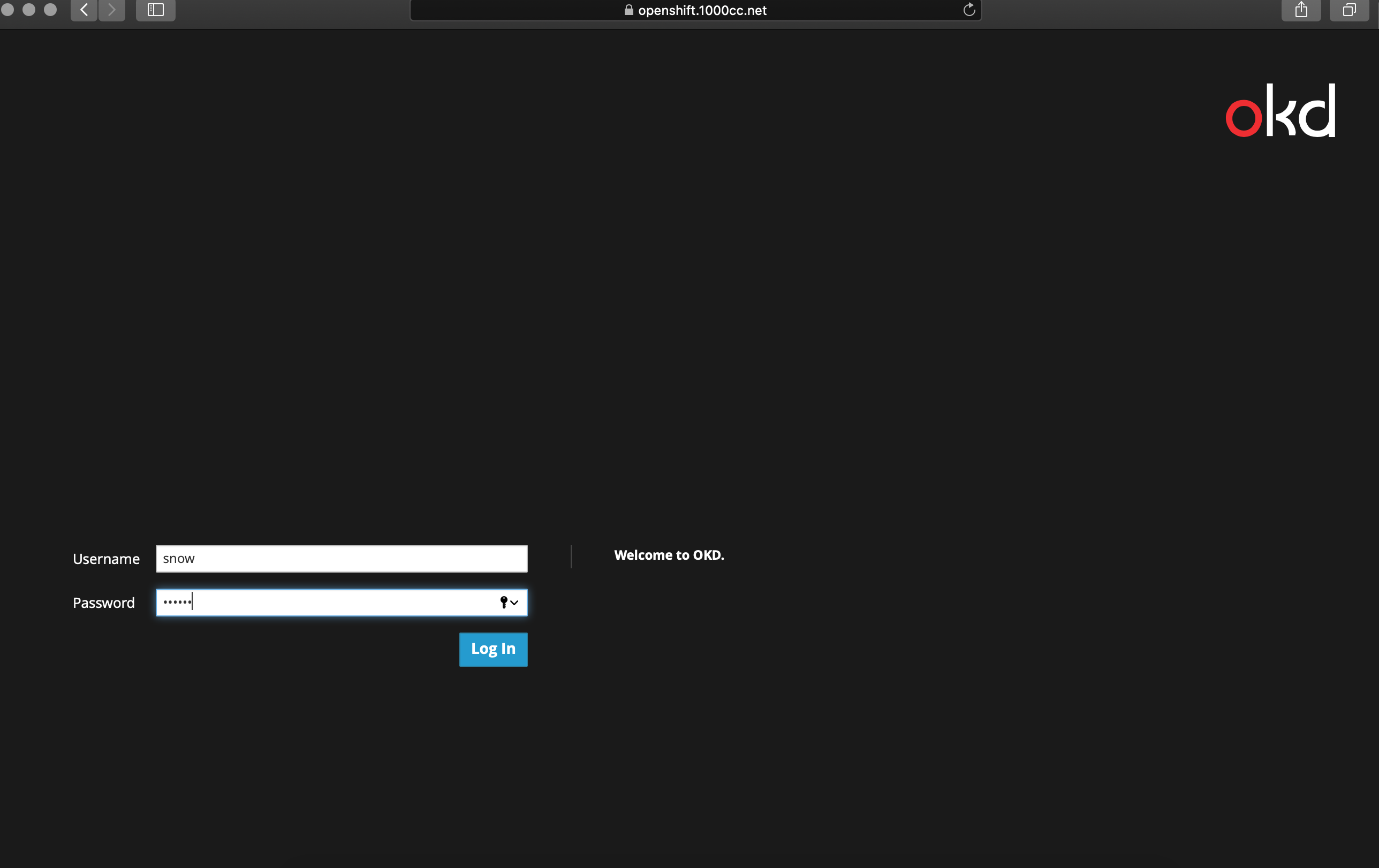

[snow@openshift ~]$ oc login

Authentication required for https://openshift.1000cc.net:8443 (openshift)

Username: lisa

Password:

Login successful.

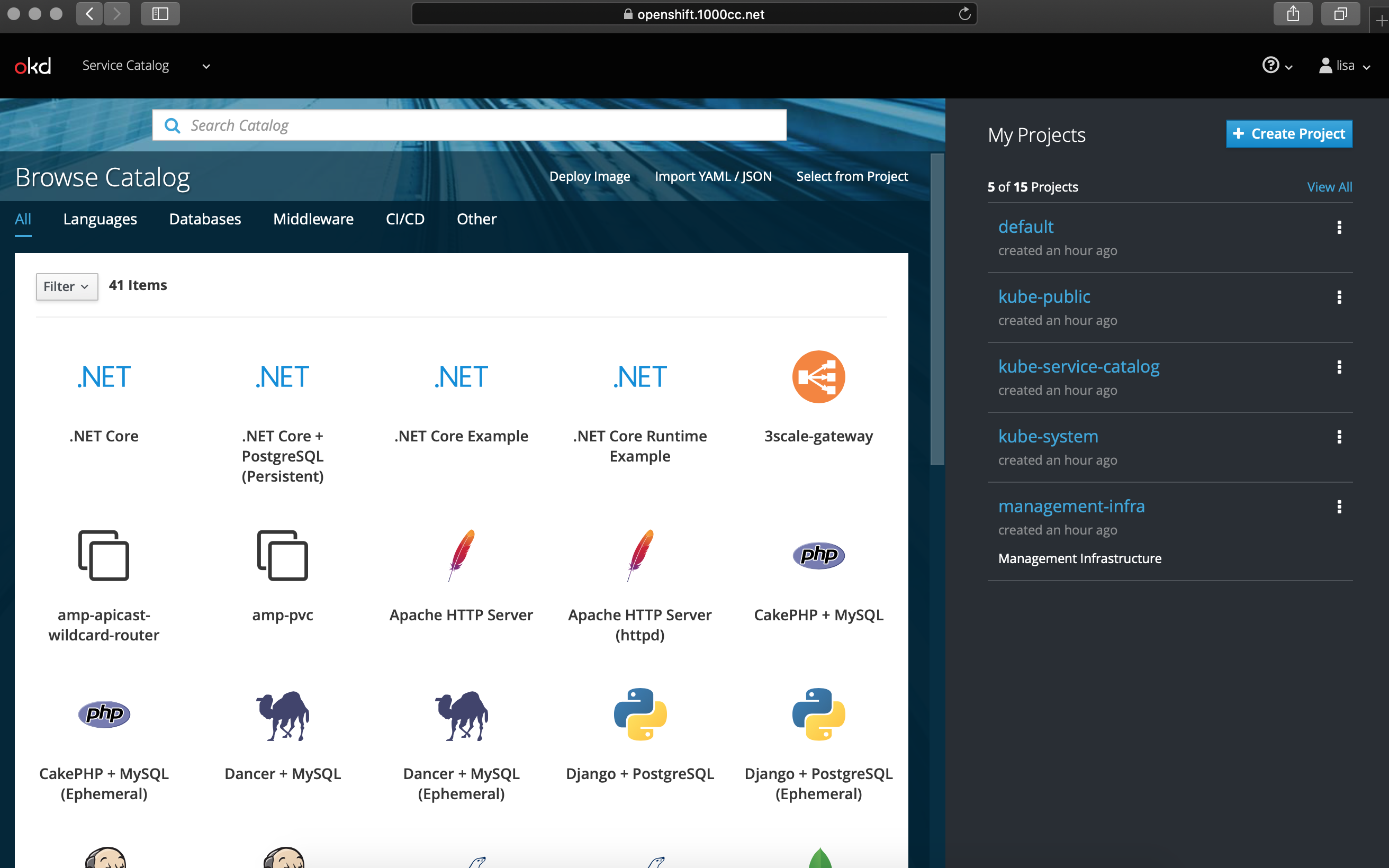

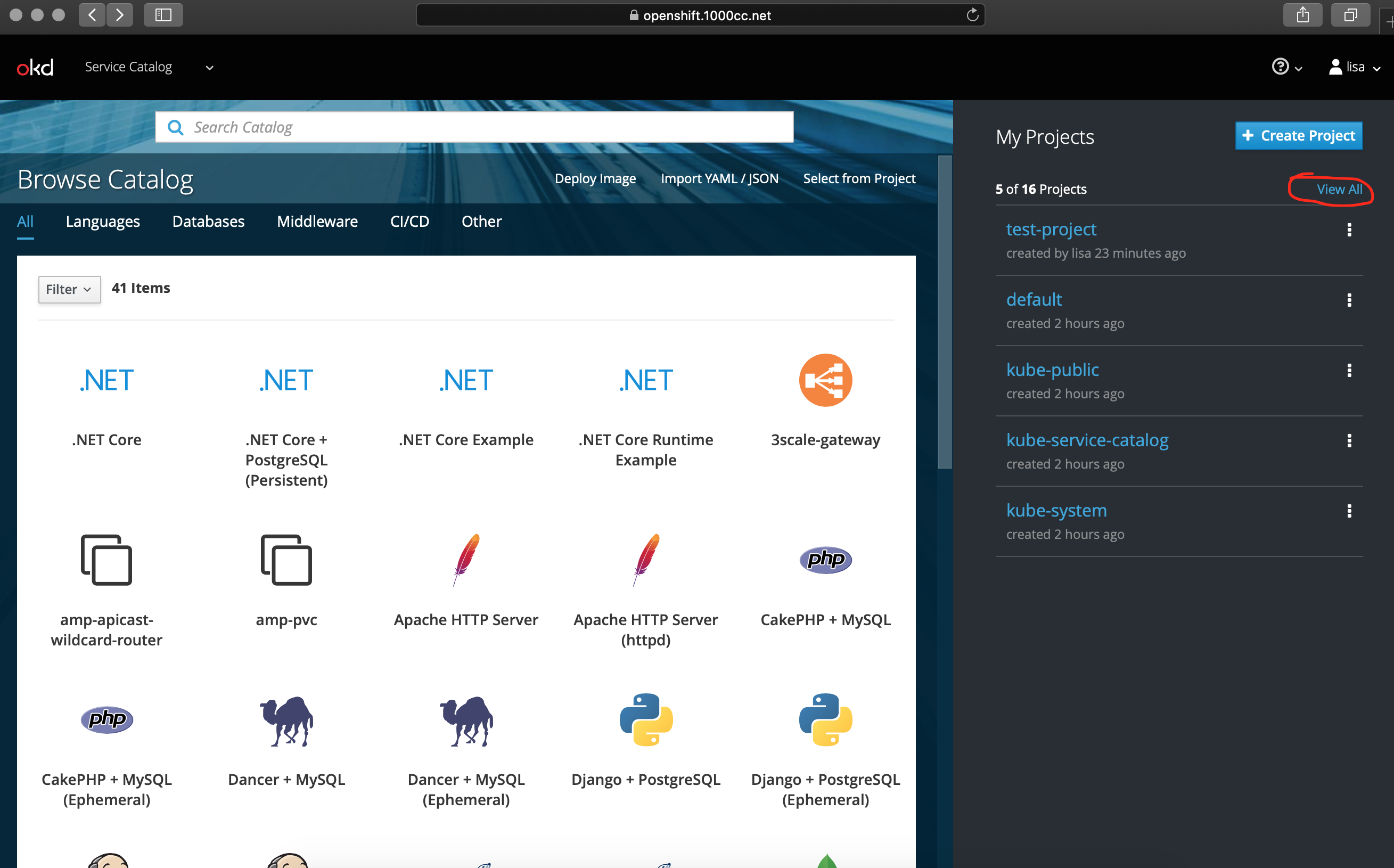

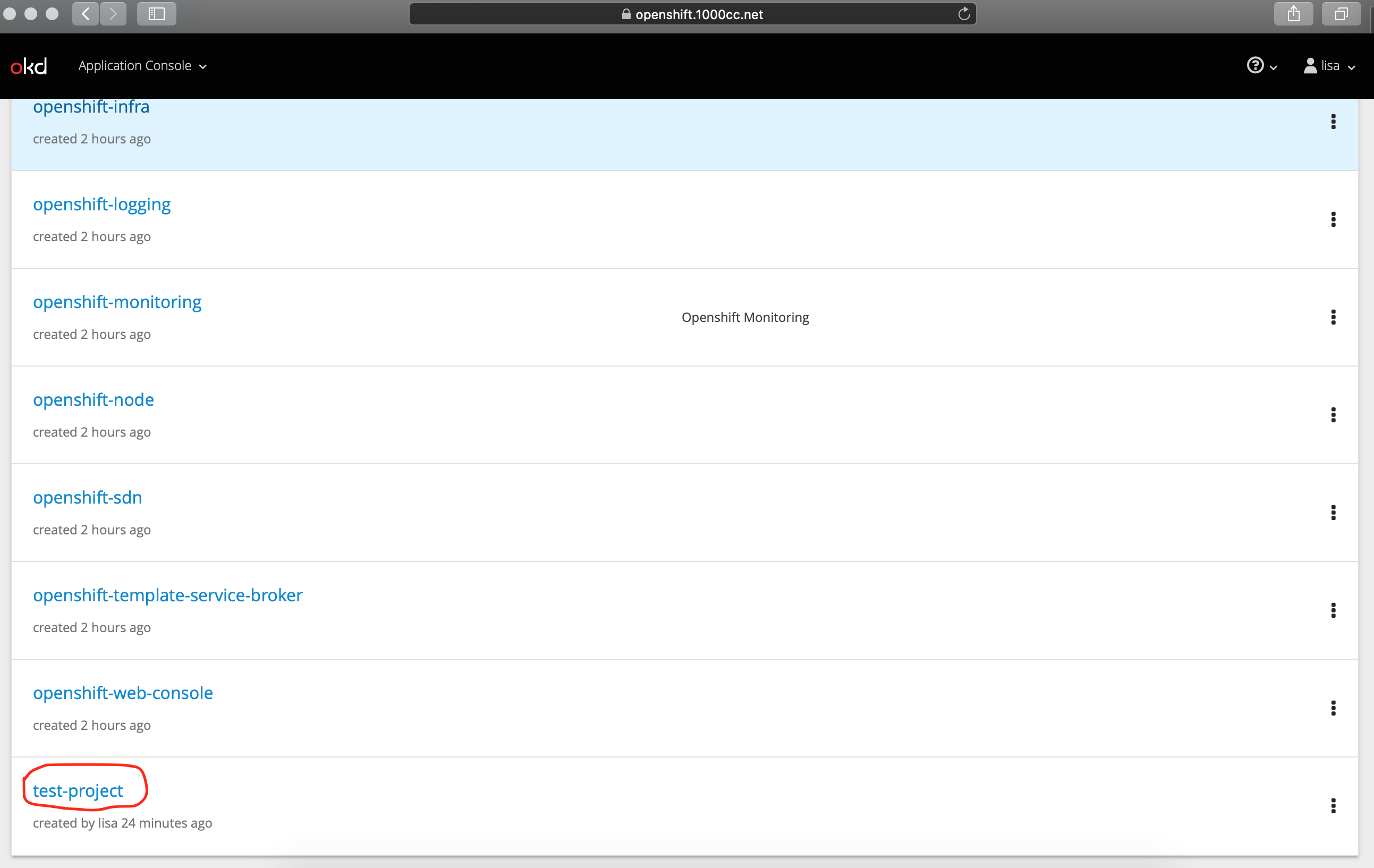

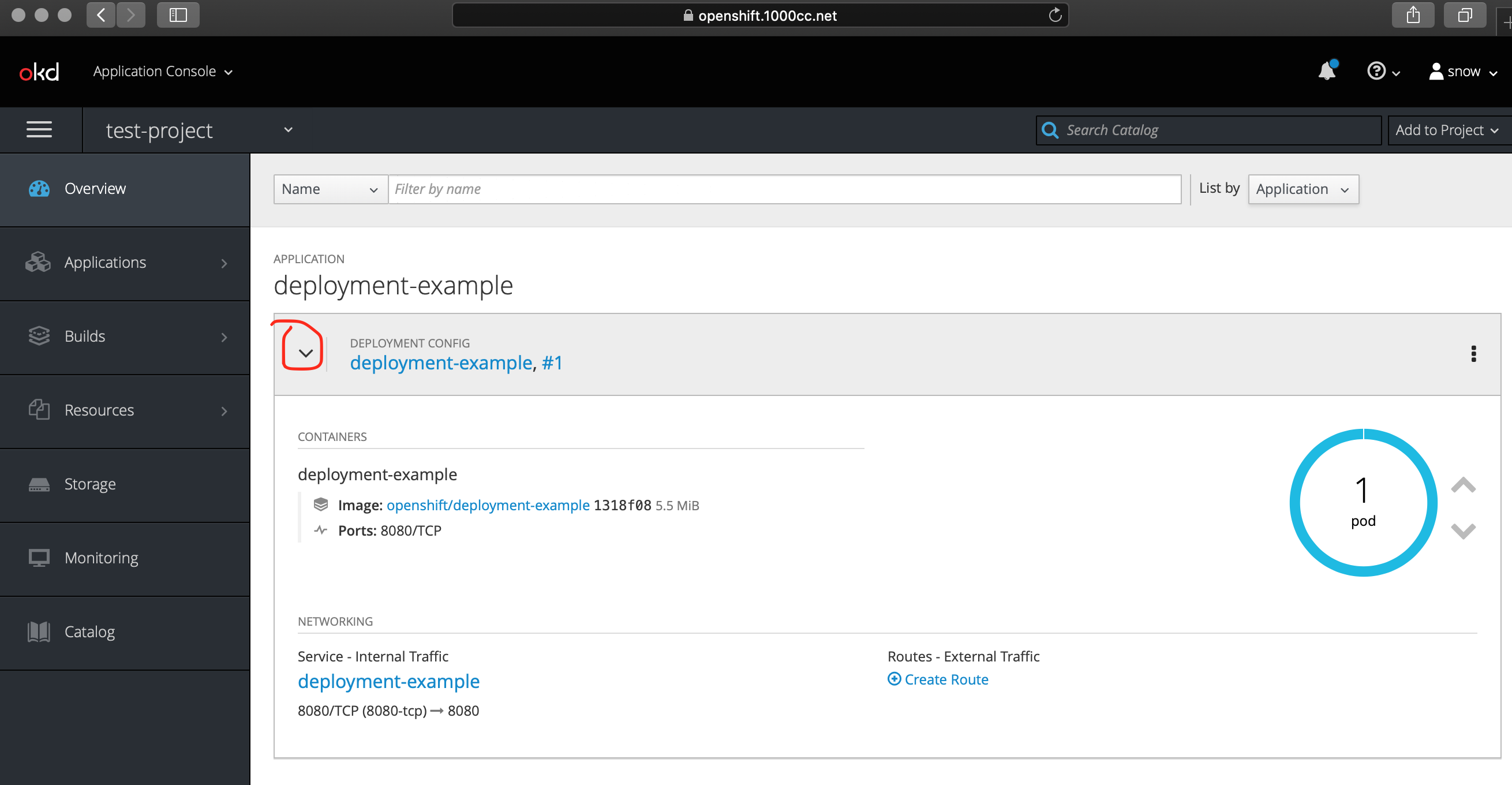

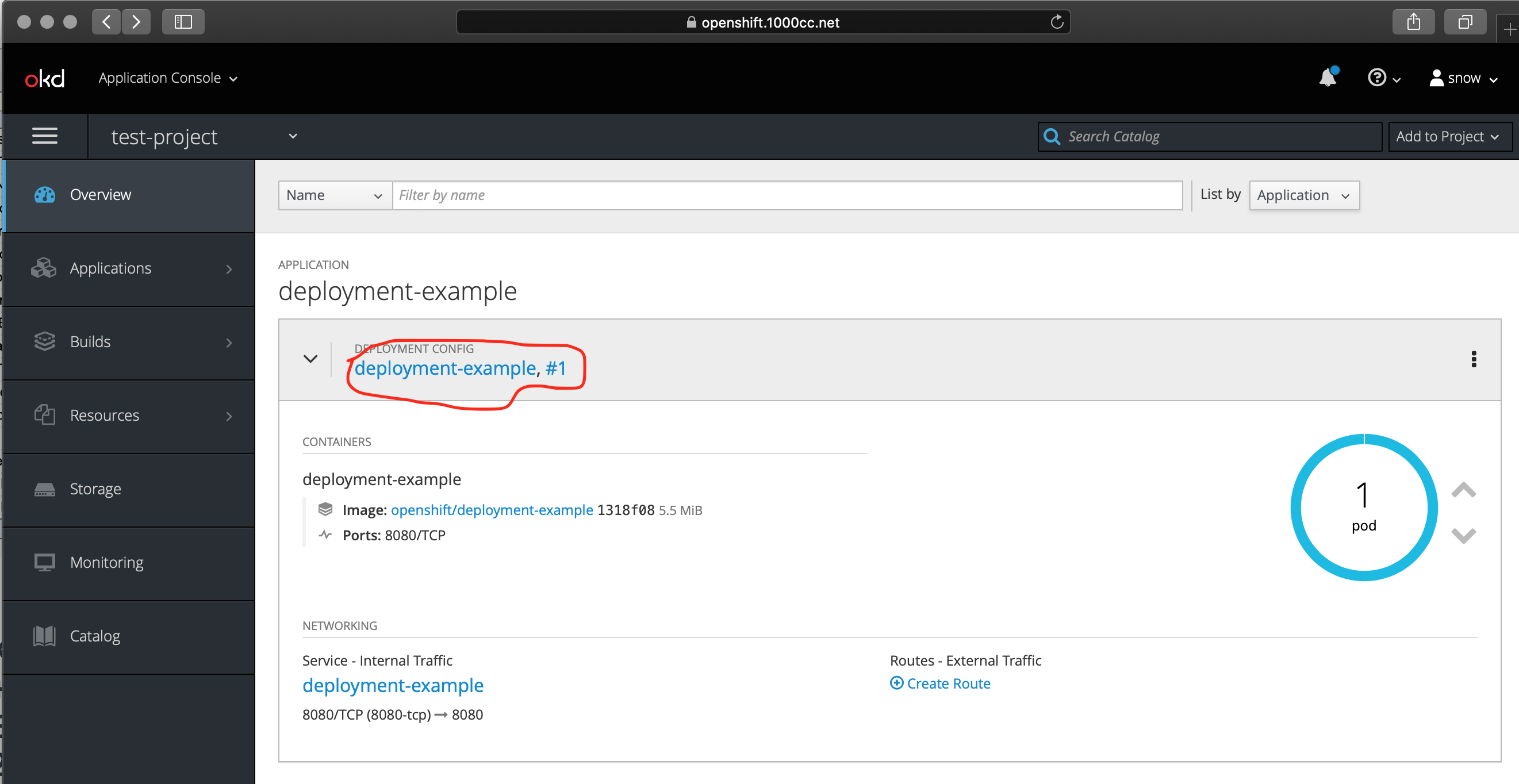

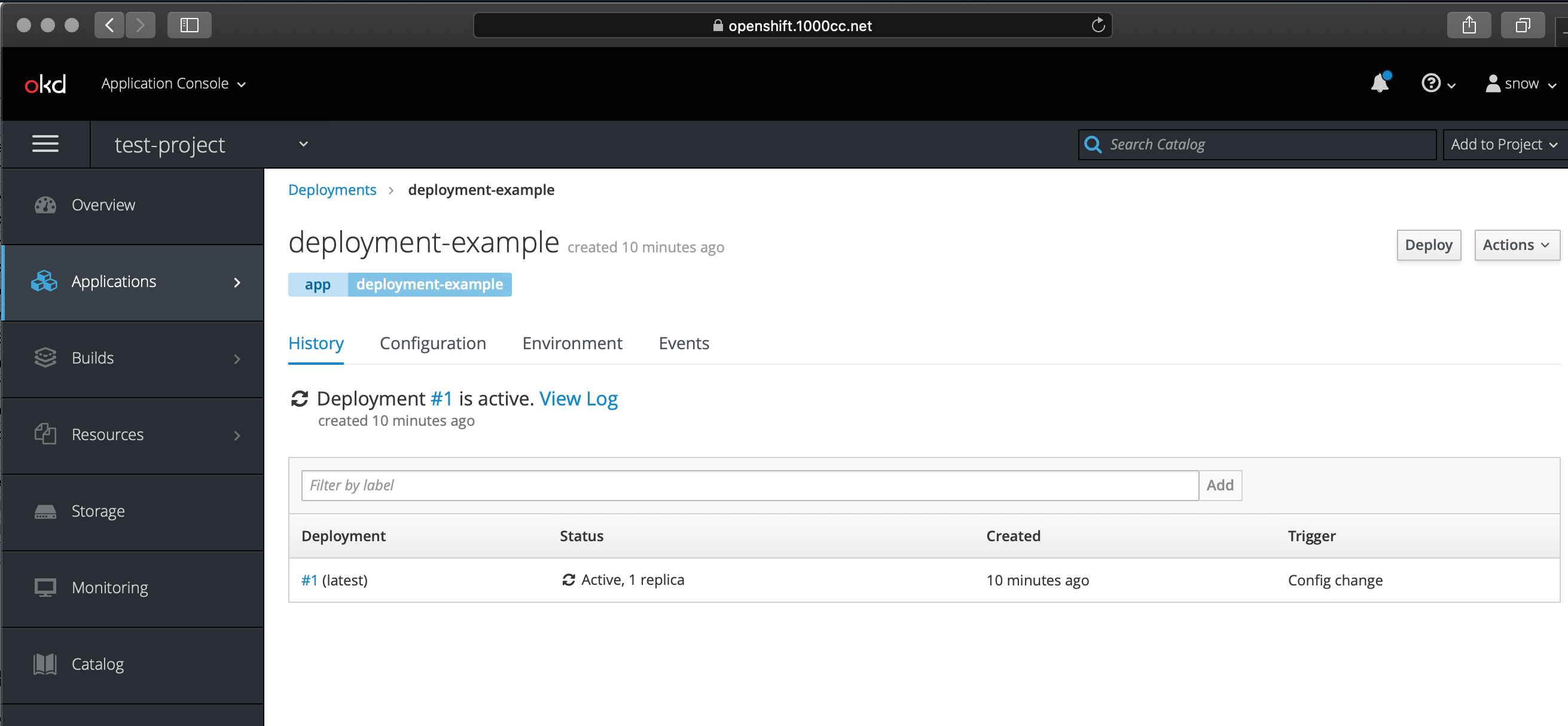

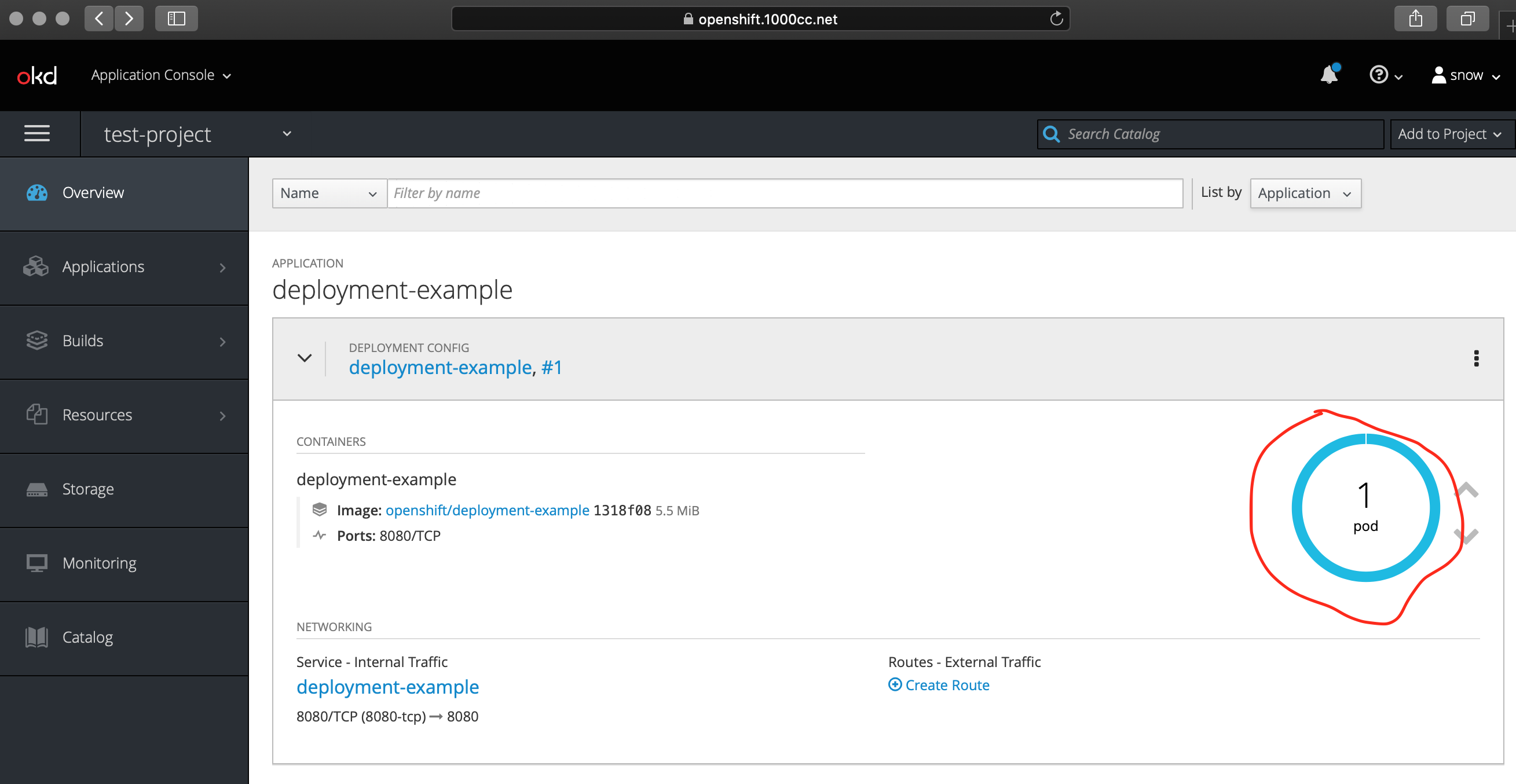

[snow@openshift ~]$ oc new-project test-project

# Or, if test-project already exists, switch to it

[snow@openshift ~]$ oc project test-project

[snow@openshift ~]$ oc new-app centos/ruby-25-centos7~https://github.com/sclorg/ruby-ex.git

--> Found Docker image c5b6c39 (2 months old) from Docker Hub for "centos/ruby-25-centos7"

Ruby 2.5

--------

Ruby 2.5 available as container is a base platform for building and running various Ruby 2.5 applications and frameworks. Ruby is the interpreted scripting language for quick and easy object-oriented programming. It has many features to process text files and to do system management tasks (as in Perl). It is simple, straight-forward, and extensible.

Tags: builder, ruby, ruby25, rh-ruby25

* An image stream tag will be created as "ruby-25-centos7:latest" that will track the source image

* A source build using source code from https://github.com/sclorg/ruby-ex.git will be created

* The resulting image will be pushed to image stream tag "ruby-ex:latest"

* Every time "ruby-25-centos7:latest" changes a new build will be triggered

* This image will be deployed in deployment config "ruby-ex"

* Port 8080/tcp will be load balanced by service "ruby-ex"

* Other containers can access this service through the hostname "ruby-ex"

--> Creating resources ...

imagestream.image.openshift.io "ruby-25-centos7" created

imagestream.image.openshift.io "ruby-ex" created

buildconfig.build.openshift.io "ruby-ex" created

deploymentconfig.apps.openshift.io "ruby-ex" created

service "ruby-ex" created

--> Success

Build scheduled, use 'oc logs -f bc/ruby-ex' to track its progress.

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose svc/ruby-ex'

Run 'oc status' to view your app.

# Follow the build and the push of the resulting image to the registry

[snow@openshift ~]$ oc logs -f bc/ruby-ex
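`oc logs -f` works well interactively, but in a script it can be simpler to poll the build phase. The sketch below makes these assumptions:

* The first build of `bc/ruby-ex` is named `ruby-ex-1`, as the `ruby-ex-1-build` pod suggests.
* The terminal phases of interest are `Complete`, `Failed`, `Error`, and `Cancelled`.

`wait_for_build` and the 5-second poll interval are illustrative choices:

```shell
# Hypothetical helper: poll a build's phase until it finishes.
# Usage: wait_for_build ruby-ex-1 [timeout_seconds]
# Returns 0 on Complete; 1 on Failed/Error/Cancelled, lookup error or timeout.
wait_for_build() {
    local build="$1" timeout="${2:-600}" waited=0 phase
    while [ "$waited" -lt "$timeout" ]; do
        phase=$(oc get build "$build" -o jsonpath='{.status.phase}') || return 1
        case $phase in
            Complete) return 0 ;;
            Failed|Error|Cancelled)
                echo "build $build ended in phase $phase" >&2
                return 1 ;;
        esac
        sleep 5
        waited=$((waited + 5))
    done
    echo "timed out waiting for build $build" >&2
    return 1
}
```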

[snow@openshift ~]$ oc status

In project test-project on server https://openshift.1000cc.net:8443

svc/ruby-ex - 172.30.253.178:8080

dc/ruby-ex deploys istag/ruby-ex:latest <-

bc/ruby-ex source builds https://github.com/sclorg/ruby-ex.git on istag/ruby-25-centos7:latest

deployment #1 deployed 22 seconds ago - 1 pod

pod/nginx-nfs runs fedora/nginx

3 infos identified, use 'oc status --suggest' to see details.

[snow@openshift ~]$ oc get pods

NAME READY STATUS RESTARTS AGE

nginx-nfs 1/1 Running 0 19m

ruby-ex-1-build 0/1 Completed 0 6m

ruby-ex-1-qbxxt 1/1 Running 0 1m

[snow@openshift ~]$ oc describe service ruby-ex

Name: ruby-ex

Namespace: test-project

Labels: app=ruby-ex

Annotations: openshift.io/generated-by=OpenShiftNewApp

Selector: app=ruby-ex,deploymentconfig=ruby-ex

Type: ClusterIP

IP: 172.30.253.178

Port: 8080-tcp 8080/TCP

TargetPort: 8080/TCP

Endpoints: 10.129.0.7:8080

Session Affinity: None

Events: <none>

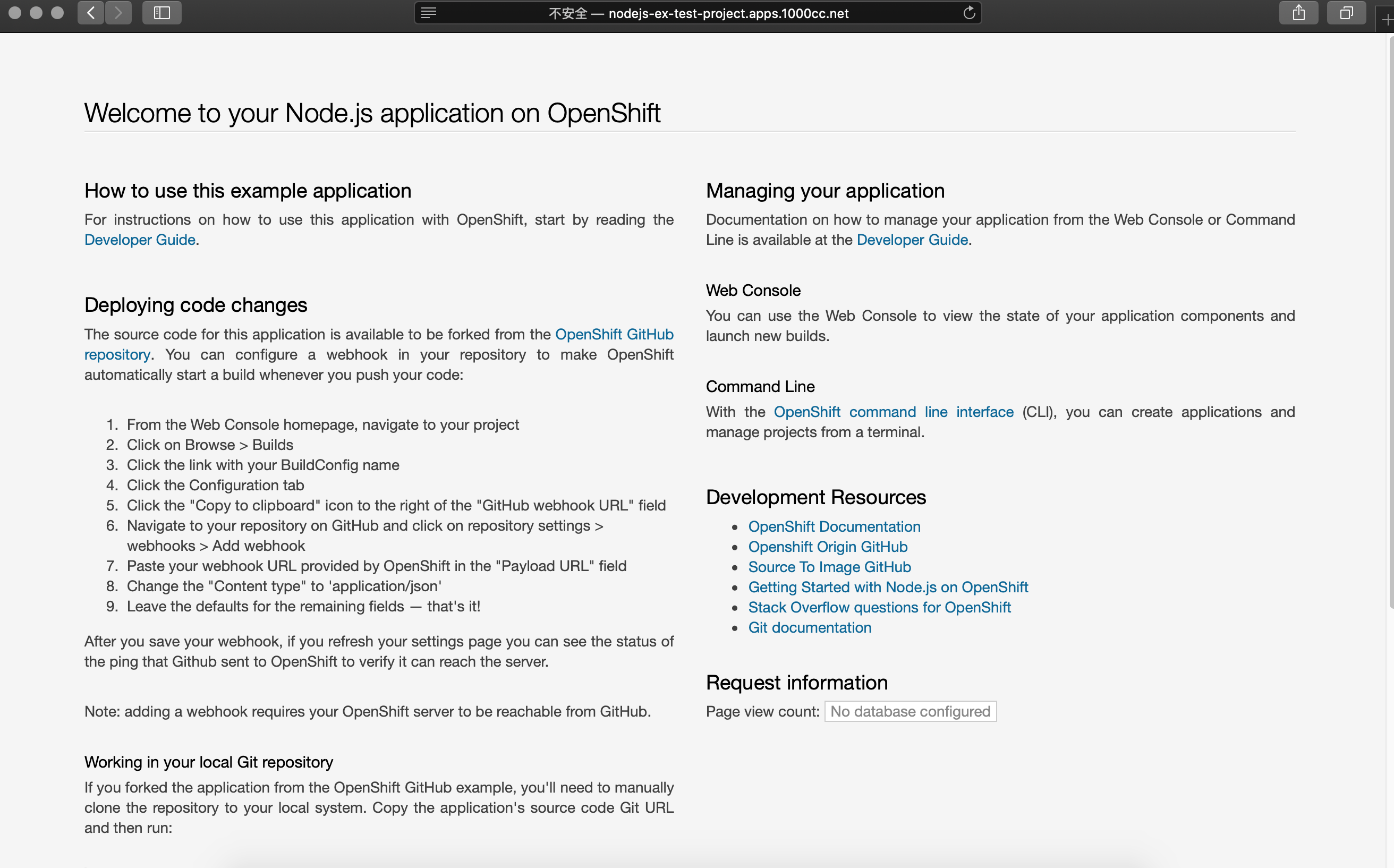

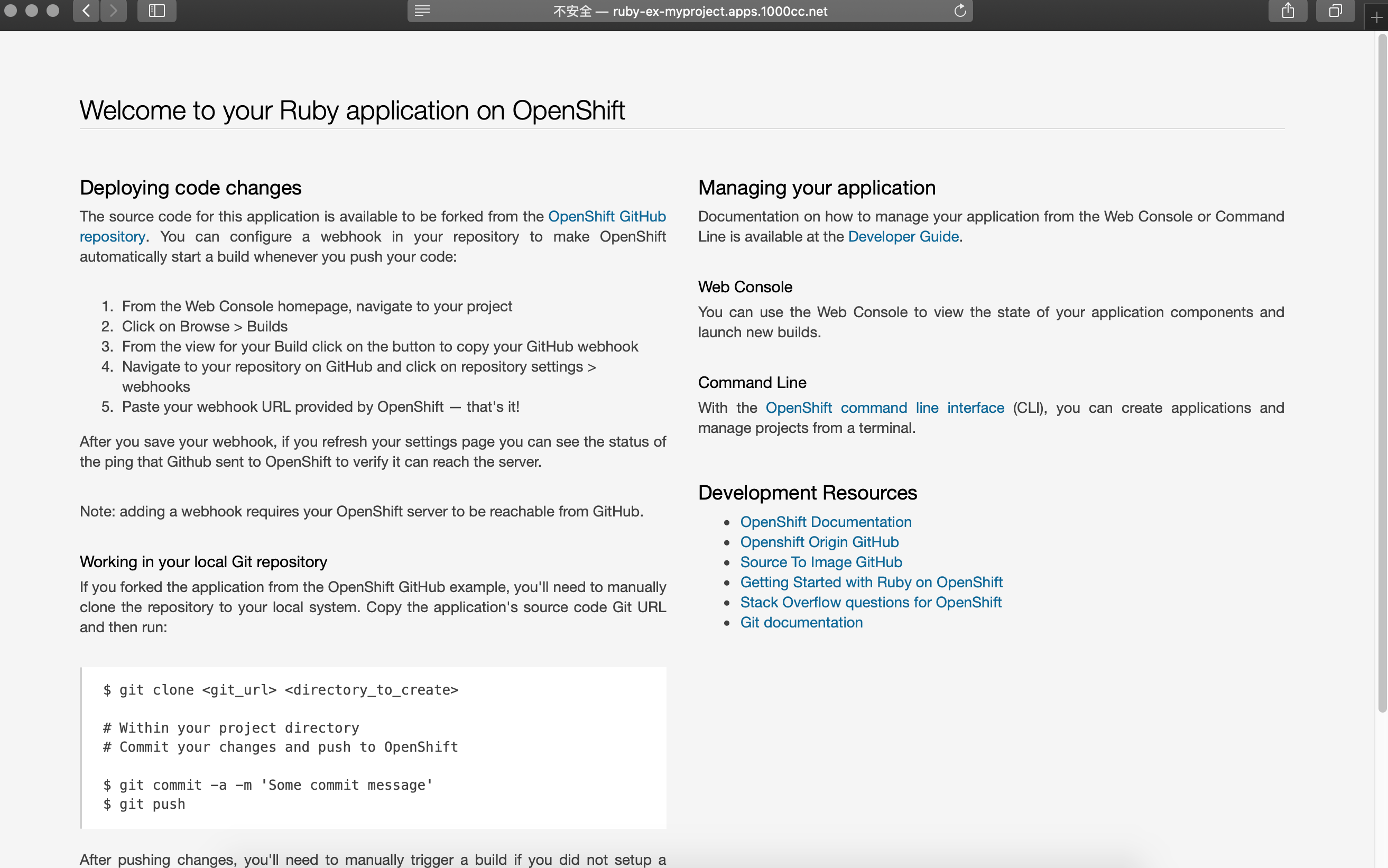

[snow@openshift ~]$ curl 172.30.253.178:8080

......

......

<section class='container'>

<hgroup>

<h1>Welcome to your Ruby application on OpenShift</h1>

</hgroup>

......

......

</body>

</html>
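The ClusterIP changes whenever the service is recreated, so copying it by hand is error-prone. The hypothetical helper below looks up the IP with jsonpath and then fetches the page. Like the `curl` above, it must run on a cluster node, because ClusterIPs are normally reachable only from nodes and pods inside the cluster:

```shell
# Hypothetical helper: look up a service's ClusterIP, then GET "/" from it.
# Usage: curl_service ruby-ex 8080
curl_service() {
    local svc="$1" port="$2" ip
    ip=$(oc get svc "$svc" -o jsonpath='{.spec.clusterIP}') || return 1
    [ -n "$ip" ] || return 1    # service has no ClusterIP (or was not found)
    curl -s "http://${ip}:${port}/"
}
```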

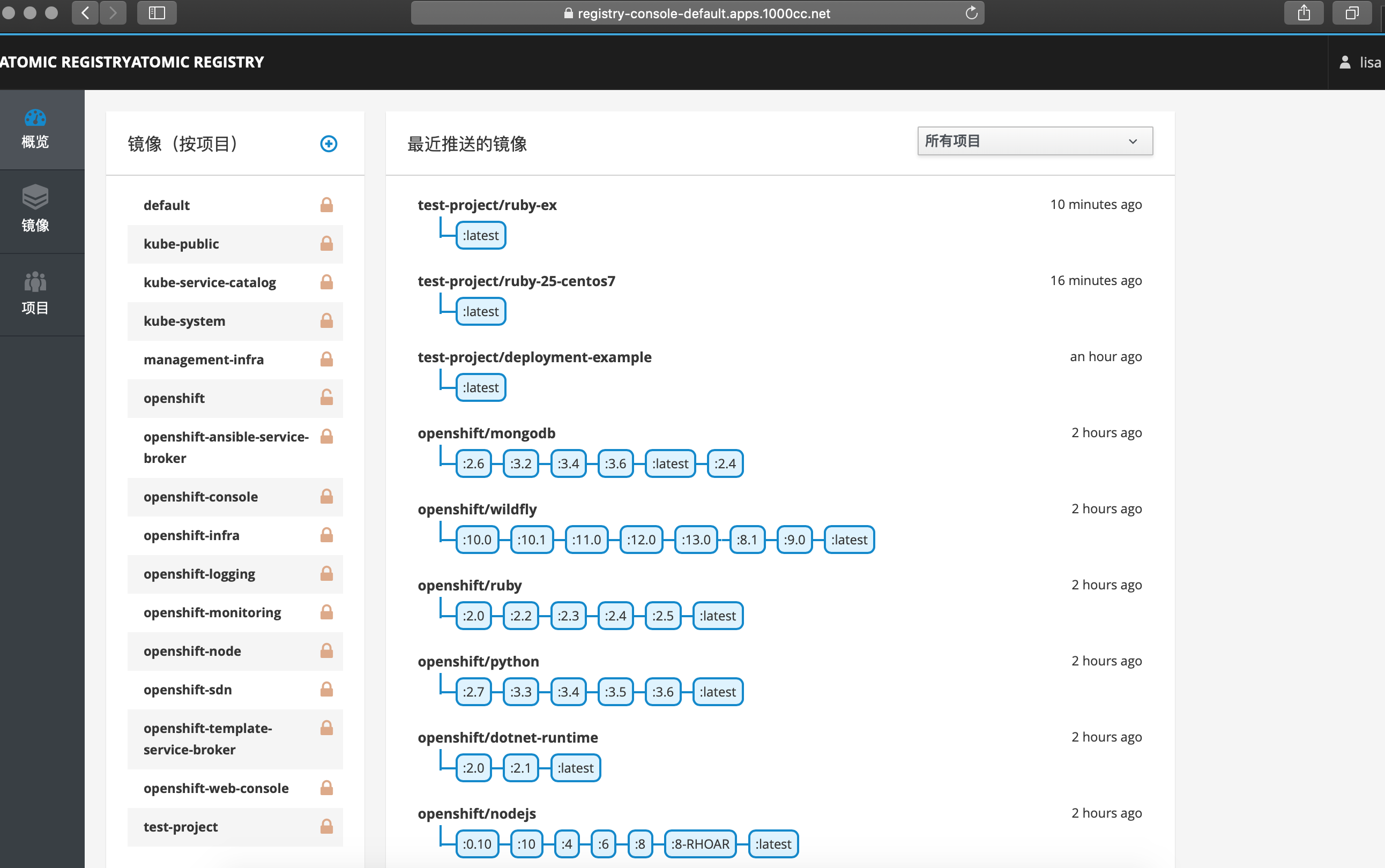

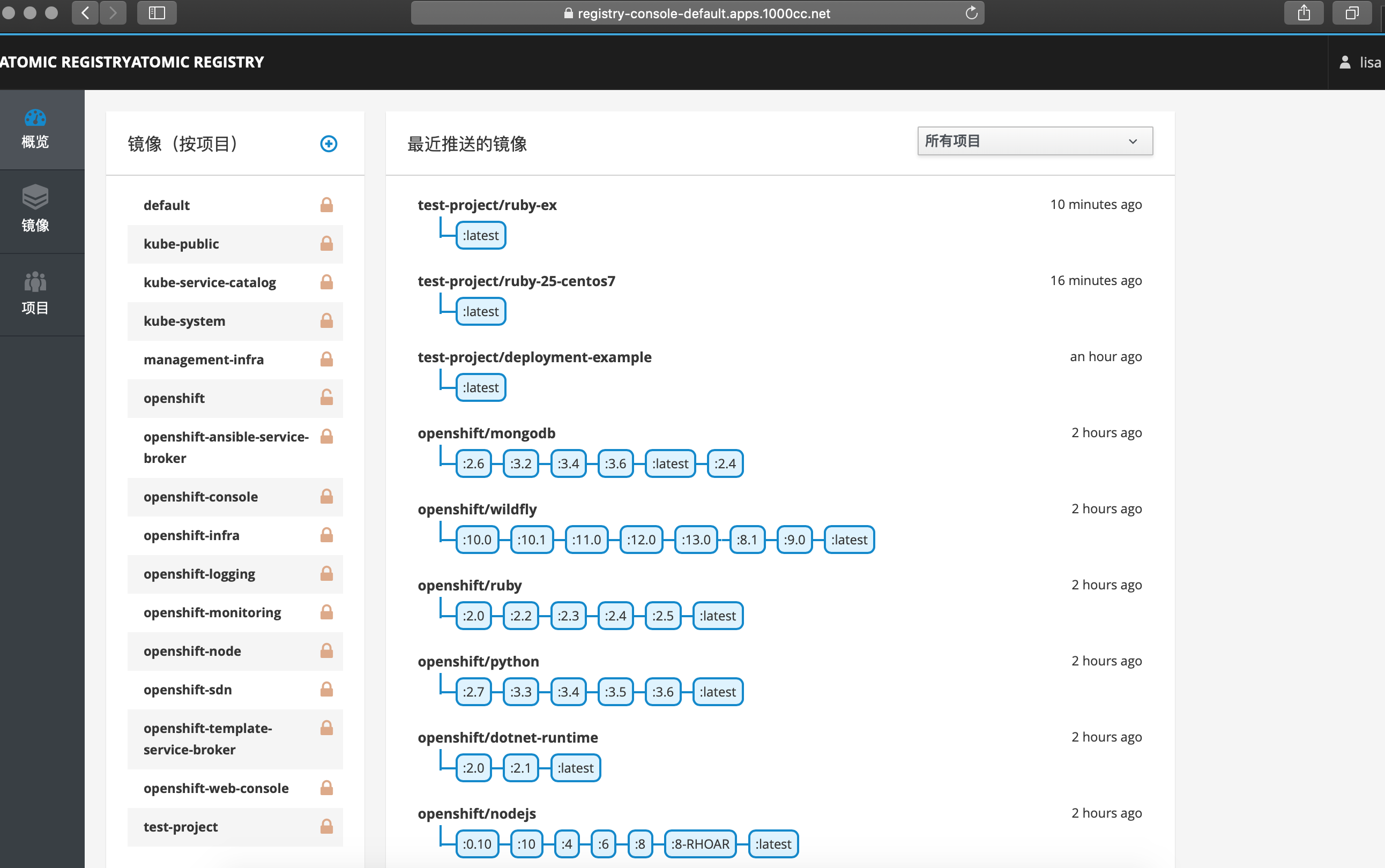

3) Enable the web UI for the registry

(1) Confirm that the registry-console route exists

[snow@openshift ~]$ oc project default

[snow@openshift ~]$ oc get routes

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

docker-registry docker-registry-default.apps.1000cc.net docker-registry <all> passthrough None

registry-console registry-console-default.apps.1000cc.net registry-console <all> passthrough None

# If it does not exist, create it with:

[snow@openshift ~]$ oc create route passthrough --service registry-console --port registry-console -n default
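The sketch below wraps the route creation above so that it runs only when the route is missing. The function name `ensure_console_route` is hypothetical:

```shell
# Hypothetical helper: create the passthrough route for registry-console
# only if `oc get route` cannot find it (same create command as above).
ensure_console_route() {
    if oc get route registry-console -n default >/dev/null 2>&1; then
        echo "route registry-console already exists"
    else
        oc create route passthrough --service registry-console \
            --port registry-console -n default
    fi
}
```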

(2) Deploy the registry console

[snow@openshift ~]$ oc new-app -n default --template=registry-console \
-p IMAGE_NAME="docker.io/cockpit/kubernetes:latest" \
-p OPENSHIFT_OAUTH_PROVIDER_URL="https://openshift.1000cc.net:8443" \
-p REGISTRY_HOST=$(oc get route docker-registry -n default --template='{{ .spec.host }}') \
-p COCKPIT_KUBE_URL=$(oc get route registry-console -n default --template='https://{{ .spec.host }}')

--> Deploying template "openshift/registry-console" to project default

registry-console

---------

Template for deploying registry web console. Requires cluster-admin.

* With parameters:

* IMAGE_NAME=docker.io/cockpit/kubernetes:latest

* OPENSHIFT_OAUTH_PROVIDER_URL=https://openshift.1000cc.net:8443

* COCKPIT_KUBE_URL=https://registry-console-default.apps.1000cc.net

* OPENSHIFT_OAUTH_CLIENT_SECRET=userYfvUst80IXsSodOEYqyEU8ypsFFqFxaQ666YKm7LXvxD3k1L6ev5t6Xwe8s1kvH8 # generated

* OPENSHIFT_OAUTH_CLIENT_ID=cockpit-oauth-client

* REGISTRY_HOST=docker-registry-default.apps.1000cc.net

--> Creating resources ...

deploymentconfig.apps.openshift.io "registry-console" created

service "registry-console" created

oauthclient.oauth.openshift.io "cockpit-oauth-client" created

--> Success

Application is not exposed. You can expose services to the outside world by executing one or more of the commands below:

'oc expose svc/registry-console'

Run 'oc status' to view your app.

[snow@openshift ~]$ oc get pods

NAME READY STATUS RESTARTS AGE

docker-registry-1-fp5kw 1/1 Running 0 1h

registry-console-1-khx2w 1/1 Running 0 1m

router-1-rq6k2 1/1 Running 0 22h

[snow@openshift ~]$ oc get routes

NAME HOST/PORT PATH SERVICES PORT TERMINATION WILDCARD

docker-registry docker-registry-default.apps.1000cc.net docker-registry passthrough None

registry-console registry-console-default.apps.1000cc.net registry-console passthrough None

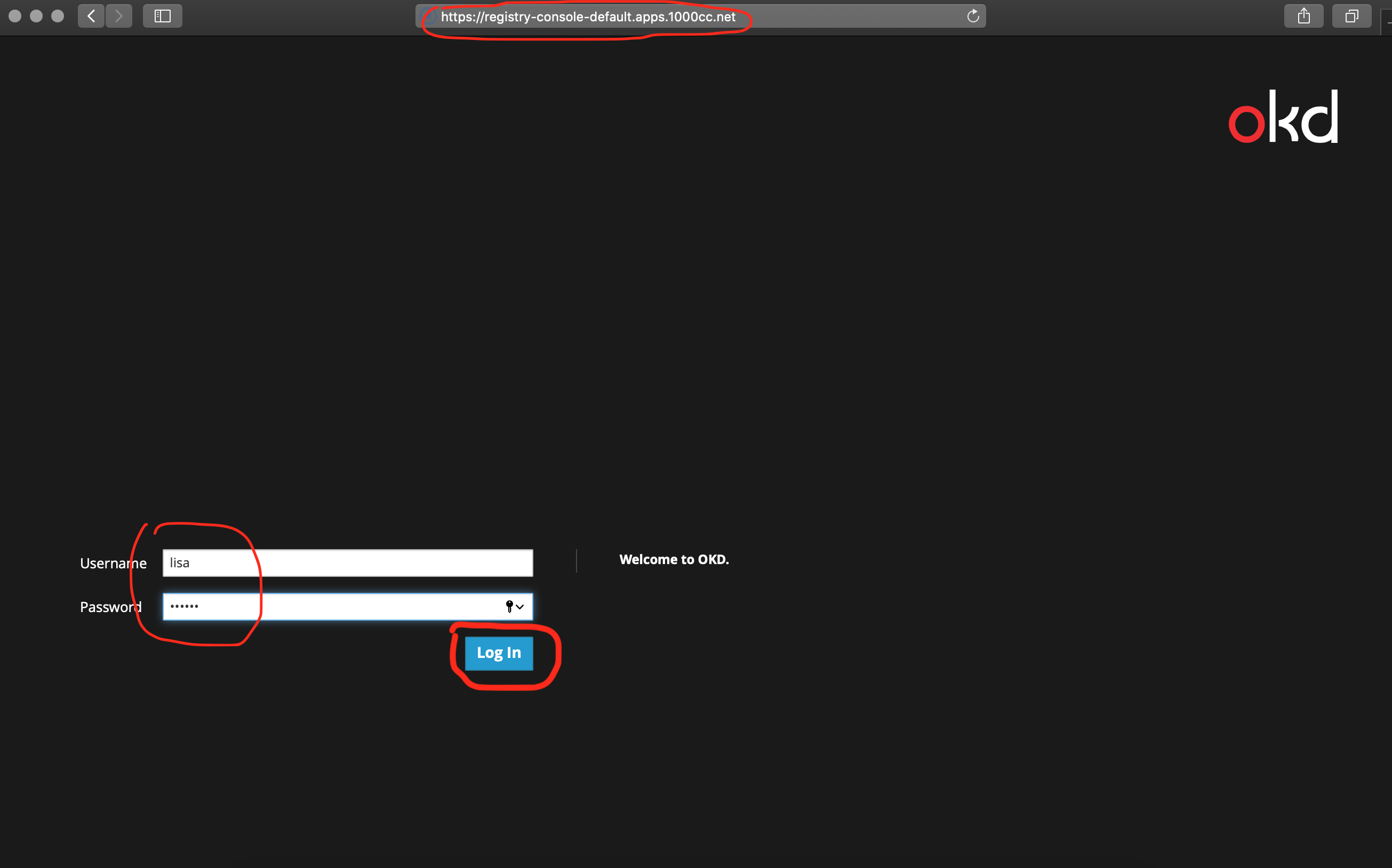

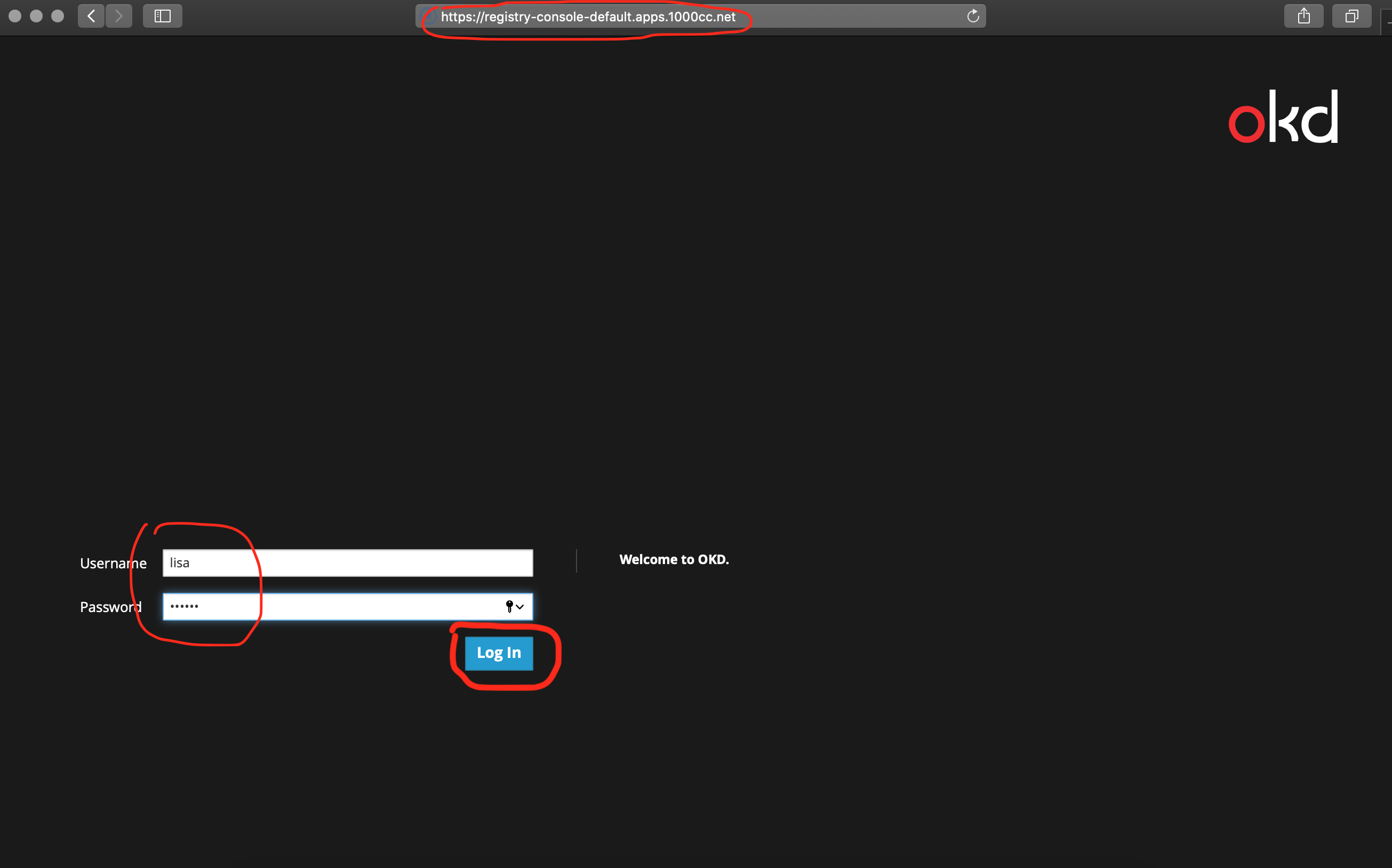
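Once the pod is running, you can read the console URL from the route with the same `--template` used when deploying the template. The hypothetical helper below waits for the rollout first:

```shell
# Hypothetical helper: wait for the registry-console deployment, then print
# the console URL built from its route host (no trailing newline).
console_url() {
    oc rollout status dc/registry-console -n default >/dev/null || return 1
    oc get route registry-console -n default --template='https://{{ .spec.host }}'
}
```

Usage: `echo "$(console_url)"`. The wrapping `echo` adds the newline that the template output lacks.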

(3) Access the console (make sure the FQDN resolves to the IP address of the Master (openshift) node)
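Before opening the browser, it may help to confirm on the client machine that the route hostname resolves to the expected node. The minimal sketch below assumes a Linux client and uses `getent hosts`, which consults both `/etc/hosts` and DNS. The IP `192.0.2.10` in the usage line is a documentation placeholder, not this cluster's master address. `resolves_to` is a hypothetical name:

```shell
# Hypothetical helper: succeed if HOST resolves (via /etc/hosts or DNS, as
# reported by getent) to EXPECTED_IP. Both values are supplied by the caller.
resolves_to() {
    local host="$1" expected="$2"
    getent hosts "$host" |
        awk -v ip="$expected" '$1 == ip { found = 1 } END { exit !found }'
}
```

Usage: `resolves_to registry-console-default.apps.1000cc.net 192.0.2.10 || echo "fix DNS or /etc/hosts first"`. Without wildcard DNS for `*.apps.1000cc.net`, an `/etc/hosts` line such as `192.0.2.10 registry-console-default.apps.1000cc.net docker-registry-default.apps.1000cc.net` (with the real master IP) serves the same purpose.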

[Browser]===>https://registry-console-default.apps.1000cc.net

|